Join us! Gaze Estimation Dataset Collection

29/09/2024 update

Thank you all! The dataset has been recorded and no more clips are needed for now. However, if you are interested in this topic, please don't hesitate to contact me anyway. There is much more that could be developed…

I am looking for volunteers who want to help me with the last step of my M.Sc. thesis. If you are curious, take a look below. Thank you so much for your interest!

The goal 🚀

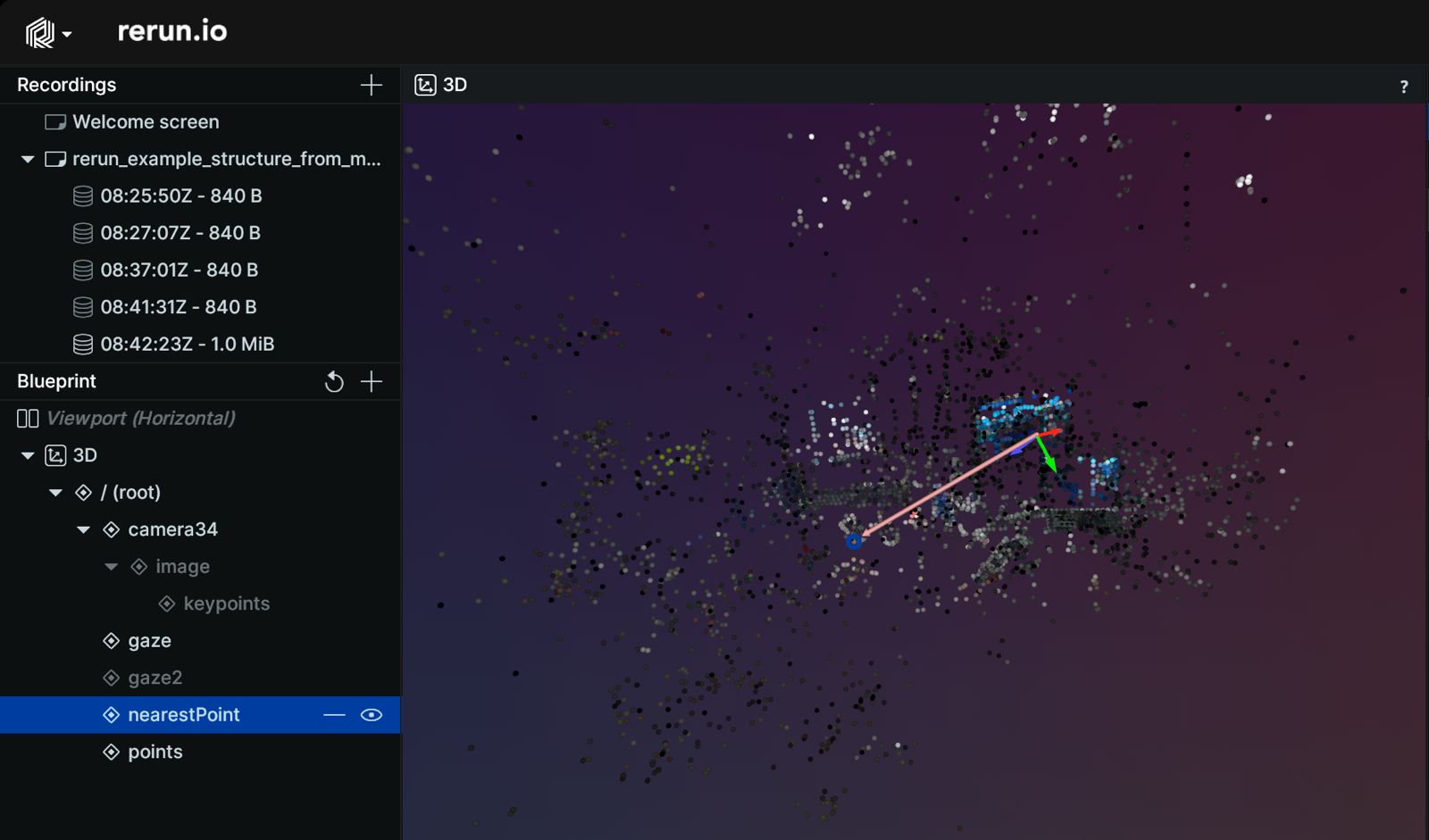

The goal of my thesis is to create a framework for gaze estimation in the wild, that is, outside the laboratory. Using some nerdy goggles (more about them later), we can record what the wearer is looking at, and my framework can then project their gaze into 3D space. The next step is to record some data and process it into a dataset that can be used to benchmark other models and advance research in this field.

Understanding where a person is looking is fundamental in a huge number of fields. In human-robot interaction (HRI), gaze estimation is a necessary step toward robots that are more intelligent and better able to understand human intentions.

The hardware 🥽

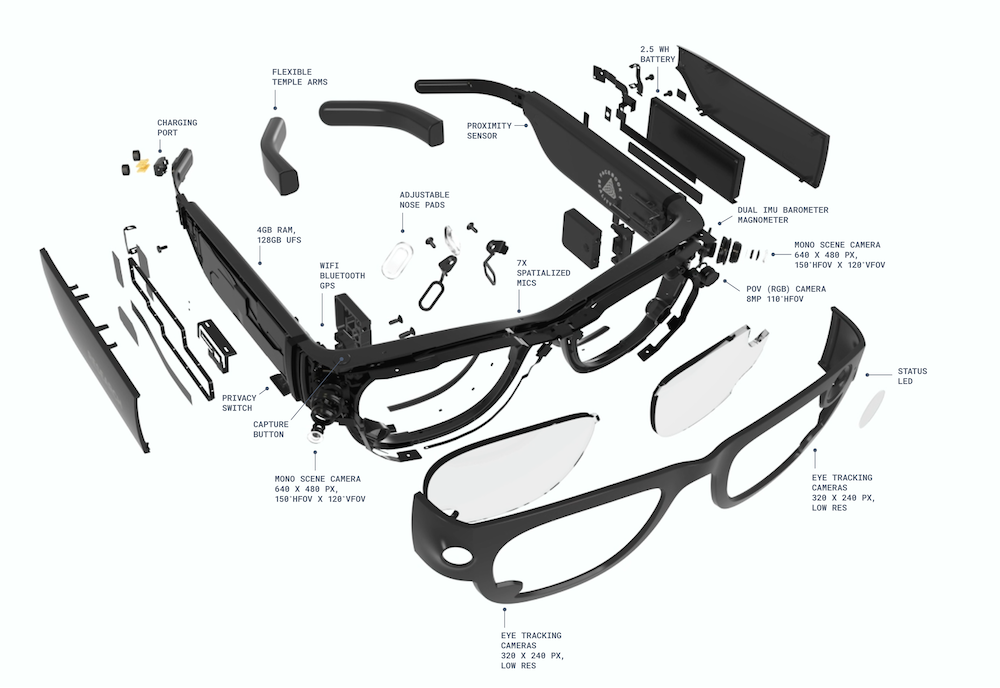

Both you and I will wear a pair of Project Aria Glasses like the one shown above. They can be worn like normal glasses. They were developed by Meta's Reality Labs for research purposes.

Their advantage over alternatives is that they have 5 cameras: 2 inward-facing cameras for eye tracking, 2 wide-angle side-view cameras, and 1 high-resolution camera.

What you’ll do 🤖

tldr: Just look around. Seriously.

Here is a slightly longer version. You will be asked to casually look at things such as objects and trees, and also at a robot. Meanwhile, I will be watching what you are doing. We will both wear Aria Glasses and record our gaze.

In this way, we will have a first-person view (FPV, your point of view) of the objects you look at, as well as an external view that captures the scene from a little farther away. The external-view images will be used for the dataset itself, while the first-person ones will be used to annotate the gaze position.

FAQ

→ How long will it take?

A recording session lasts no more than a few minutes. Before we start, I will briefly explain what we will do. The whole thing should take about 20 minutes.

→ When and where will the recording sessions take place?

I will be available every day starting from September 24, 2024. Just contact me and we will schedule a session.

Depending on your preference, we can meet either where I work, at the Department of Engineering 'Enzo Ferrari' in Modena, or where I live, in Mirandola (MO).

→ What do I get out of it?

Eternal gratitude, nothing more, nothing less. 😉 If that’s not quite enough, consider it a rare opportunity to try out exclusive research equipment from Meta, unavailable to regular consumers. Something you wouldn’t normally get to use! Jokes aside, thank you so much if you’re willing to join me!

→ I wear prescription glasses. Can I participate?

Yes, that's not a problem. You will be asked to wear the Project Aria glasses during the recording, but during that time you won't need to read anything or move around. You can gaze at random objects; the recording works even if they look blurry to you.

→ What are you going to do with my images?

The goal is to collect a dataset that will be used for gaze estimation research. It may also be used by other universities and research laboratories. Some images may appear in my thesis, in future publications by me or by others, and on topic-related websites. The images will never be sold commercially or used outside a research context. Your name and any personal data other than the images will never appear.

You will be asked to sign this privacy policy.

Who I am and how to contact me 📮

I am Giacomo (Jack) Salici. I am completing my M.Sc. in Computer Engineering (AI curriculum) with a thesis titled «Leveraging Gaze Estimation in Human-Robot Interaction: development of a framework for Eye Tracking in the Wild», supervised by Prof. Vezzani. I have won a scholarship for a PhD in ICT, which I should start later this year.

If you are interested in collaborating, please email me at 270385 AT studenti.unimore.it or reach me on Telegram @ jacksalici.